
The Competing Consumers Pattern enables messages from Message Queues (or Topics) to be processed concurrently by multiple Consumers. This improves scalability and availability, but it also introduces issues you need to consider, such as message ordering and moving the bottleneck.

YouTube

Check out my YouTube channel, where I post all kinds of content that accompanies my posts, including this video showing everything in this post.

Competing Consumers Pattern

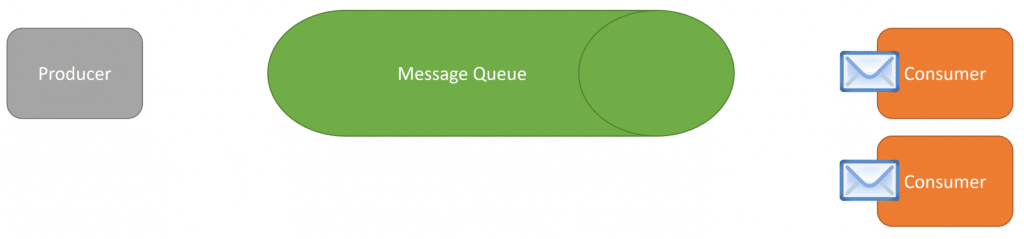

Message Queues are a great way to offload work so that it can be handled separately by another process. For example, if you have a web application that takes an HTTP request, it can add a new message to the queue and then immediately return to the client. Since the work is done asynchronously in another process, the web application isn't blocked waiting for it.

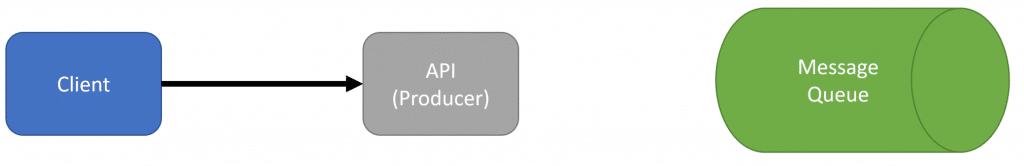

In this example, the client/browser makes an HTTP call to the web application.

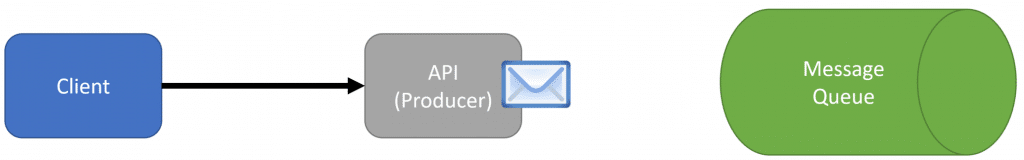

If the work behind the request can be done asynchronously and the client doesn't need an immediately consistent response, the web application creates a message instead of performing the actual work.

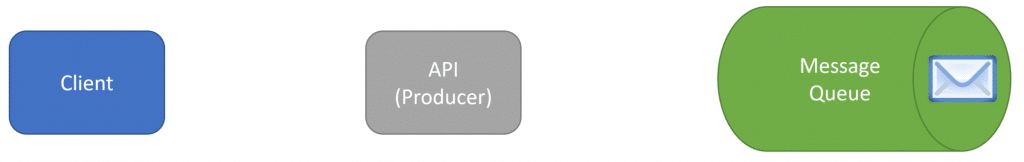

The API, which is our Producer, then sends that message to the message queue.

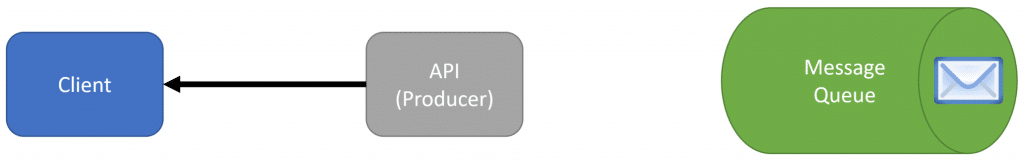

The API/Producer can then return to the client.

The actual work to be done will be handled by a Consumer. A consumer will get the message from the Message Queue and perform the work required.
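
To make the flow concrete, here is a minimal in-process sketch, assuming Python's `queue.Queue` stands in for the message broker and a thread stands in for the separate consumer process. `handle_http_request`, the `PlaceOrder` message, and the 202 status are illustrative assumptions, not part of the original demo.

```python
import queue
import threading

STOP = None  # sentinel telling the consumer to shut down


def handle_http_request(order_id: int, q: queue.Queue) -> int:
    """Producer (hypothetical): enqueue the work and return to the client right away."""
    q.put({"type": "PlaceOrder", "order_id": order_id})
    return 202  # "Accepted": the work will be done asynchronously


def consumer(q: queue.Queue, processed: list) -> None:
    """Consumer: take messages off the queue one at a time and do the work."""
    while True:
        message = q.get()  # blocks until a message is available
        if message is STOP:
            break
        processed.append(message["order_id"])  # stand-in for the real work


q = queue.Queue()
processed = []
worker = threading.Thread(target=consumer, args=(q, processed))
worker.start()

statuses = [handle_http_request(i, q) for i in range(3)]
q.put(STOP)
worker.join()
print(statuses, processed)  # [202, 202, 202] [0, 1, 2]
```

The producer never waits on the work itself; it only waits long enough to put the message on the queue.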

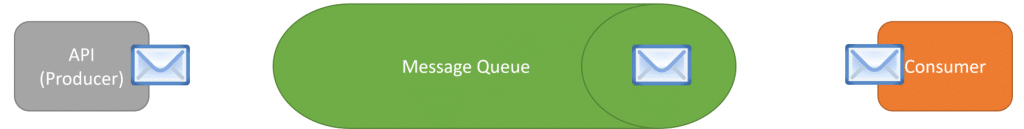

While the consumer is processing the message, our API can still be receiving HTTP requests and producing more and more messages that are added to the queue.

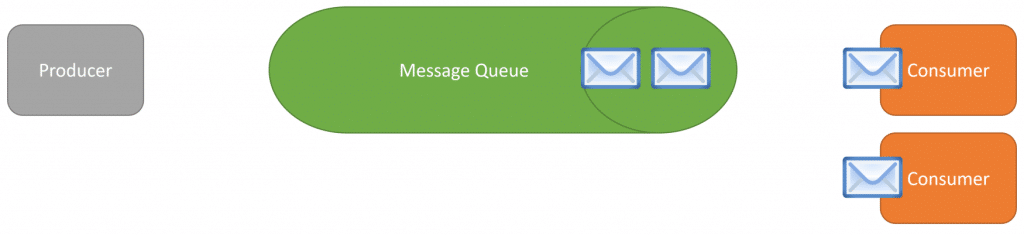

At this point, we have 3 messages in the queue. Once the consumer is done processing the message, it will go and grab the next message in the queue to process.

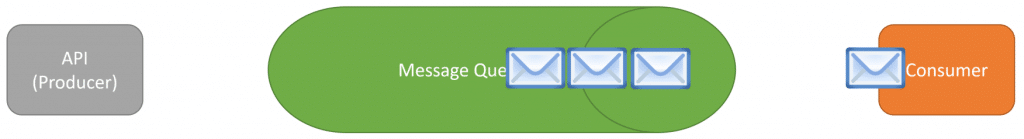

In this illustration, the rate at which we are producing messages and adding them to the queue exceeds how fast we can consume messages.
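
A back-of-the-envelope model shows why this matters: when messages arrive faster than they're consumed, the queue grows by the difference every second. This sketch assumes constant rates, and the numbers are made up for illustration.

```python
def queue_length_over_time(produced_per_sec: int, consumed_per_sec: int,
                           seconds: int, initial: int = 0) -> list:
    """Queue length at the end of each second, assuming constant rates."""
    length = initial
    history = []
    for _ in range(seconds):
        # Whatever isn't consumed stays in the queue; length can't go negative.
        length = max(0, length + produced_per_sec - consumed_per_sec)
        history.append(length)
    return history


print(queue_length_over_time(produced_per_sec=3, consumed_per_sec=1, seconds=5))
# [2, 4, 6, 8, 10]
```

With these rates the backlog grows by 2 messages every second and never recovers unless consumption catches up.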

This may or may not be an issue, depending on how quickly these messages need to be processed. If they are time-sensitive, then we must increase our throughput.

The simplest way to do that is to increase the number of consumers processing messages off the queue.

This is called the Competing Consumers Pattern because each consumer is competing for the next message in the queue.

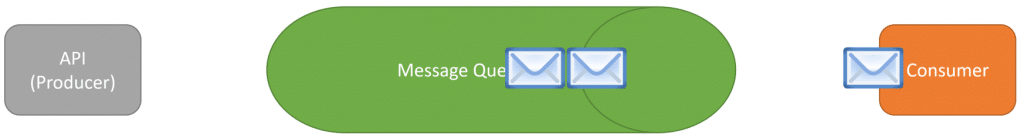

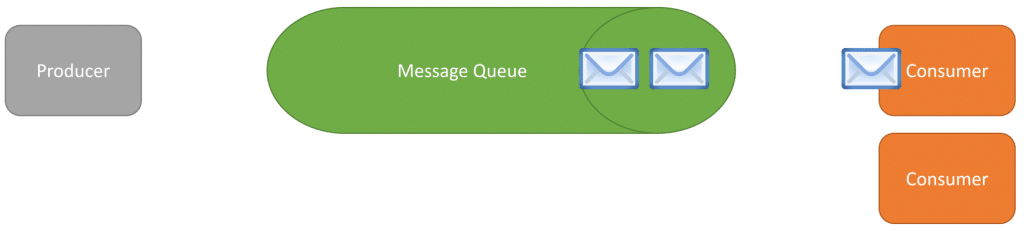

Suppose we have two Consumers that are both busy processing a message, with two messages waiting in the queue.

The moment one of the consumers finishes processing its message, it will get the next message in the queue.

When the second consumer becomes free, it gets the following message in the queue.
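
Here's a minimal sketch of the pattern with several consumer threads reading from one shared `queue.Queue`, again assumed to be an in-process stand-in for a real broker. Each `get()` hands a message to exactly one consumer, so the consumers compete for messages and no message is processed twice. The `run` helper and the sentinel-based shutdown are illustrative choices, not from the original post.

```python
import queue
import threading
from collections import Counter

STOP = None  # sentinel: tells one consumer to shut down


def consumer(name, q, results, lock):
    """Compete with the other consumers for the next message on the shared queue."""
    while True:
        message = q.get()  # blocks until a message is available
        if message is STOP:
            return
        # ... real work would happen here ...
        with lock:
            results.append((name, message))


def run(num_consumers, num_messages):
    q = queue.Queue()
    results = []
    lock = threading.Lock()
    for m in range(num_messages):
        q.put(m)
    # FIFO order means every real message is taken before any sentinel;
    # one sentinel per consumer stops each of them exactly once.
    for _ in range(num_consumers):
        q.put(STOP)
    threads = [
        threading.Thread(target=consumer, args=(f"consumer-{i}", q, results, lock))
        for i in range(num_consumers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


results = run(num_consumers=4, num_messages=20)
# How the 20 messages are split between consumers varies from run to run.
print(Counter(name for name, _ in results))
```

Which consumer handles which message is not deterministic; the only guarantee is that each message is delivered to one of them.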

Increasing Throughput

The Competing Consumers Pattern allows you to scale horizontally by adding more consumers to process more messages concurrently. Adding consumers increases throughput, which helps you manage the length of the queue, and it improves scalability and availability.
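
As a rough sizing exercise, assume each consumer handles a roughly constant number of messages per second and that adding consumers scales linearly (which stops being true once a downstream resource saturates, as discussed under Moving the Bottleneck). The function names and numbers here are hypothetical.

```python
import math


def consumers_needed(arrival_rate: float, per_consumer_rate: float) -> int:
    """Fewest consumers whose combined rate at least matches the arrival rate."""
    return math.ceil(arrival_rate / per_consumer_rate)


def seconds_to_drain(backlog: int, arrival_rate: float,
                     per_consumer_rate: float, consumers: int) -> float:
    """How long an existing backlog takes to clear while new messages keep arriving."""
    net_rate = consumers * per_consumer_rate - arrival_rate
    if net_rate <= 0:
        return math.inf  # consumers only keep pace (or fall behind): the backlog never clears
    return backlog / net_rate


print(consumers_needed(arrival_rate=50, per_consumer_rate=10))  # 5
print(seconds_to_drain(1000, 50, 10, consumers=5))              # inf
print(seconds_to_drain(1000, 50, 10, consumers=6))              # 100.0
```

Note that the minimum number of consumers only keeps the queue from growing; clearing an existing backlog takes capacity beyond that.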

The number of messages fluctuates in most systems; for example, you may see more messages during business hours than after hours. The pattern allows you to scale the number of consumers, and the resources they use, to match: at peak hours you increase the number of deployed Consumers, and off-peak you reduce it.
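
One way to automate this is to derive the consumer count from the current queue length, clamped to a minimum and maximum. The rule below is a hypothetical policy, not something from the original post; `target_per_consumer` (how many waiting messages one consumer should be responsible for) and the bounds are assumptions you would tune for your own system.

```python
import math


def desired_consumers(queue_length: int, target_per_consumer: int,
                      min_consumers: int, max_consumers: int) -> int:
    """Hypothetical scaling rule: roughly one consumer per `target_per_consumer` waiting messages."""
    wanted = math.ceil(queue_length / target_per_consumer)
    # Keep a floor for availability and a ceiling to protect downstream resources.
    return max(min_consumers, min(max_consumers, wanted))


for length in (30, 450, 1500):  # e.g. overnight, mid-morning, peak
    print(length, "->", desired_consumers(length, target_per_consumer=100,
                                          min_consumers=2, max_consumers=10))
# 30 -> 2
# 450 -> 5
# 1500 -> 10
```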

Moving the Bottleneck

There are a couple of issues with the Competing Consumers Pattern. The first is moving the bottleneck.

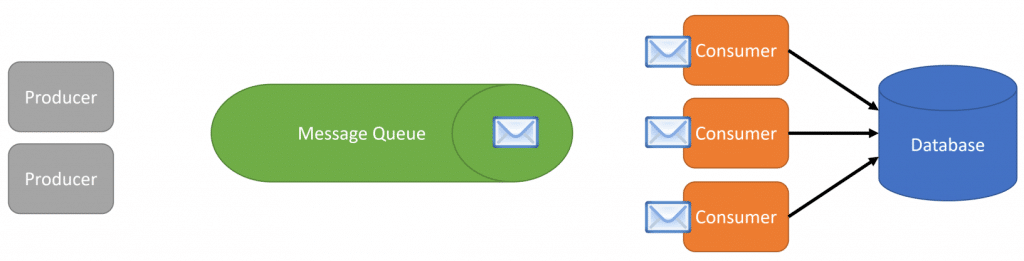

If you have a lot of messages in the queue and you add more consumers to increase throughput, you're going to be processing more work concurrently. This can have a negative effect on any downstream resources used to process messages.

For example, if processing the messages involves interacting with a database, you've moved the bottleneck to the database.

Any resources (databases, caches, services) will feel the effects of adding more consumers and processing more messages concurrently. Beware of moving the bottleneck: make sure downstream resources can handle the increased load.
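
To see how the load shifts downstream, this sketch counts how many calls are in flight at once against a shared resource. `FakeDatabase` and `peak_db_concurrency` are hypothetical stand-ins; `save` sleeps to simulate query latency. With one consumer there's never more than one concurrent call, and adding consumers pushes the peak up toward the number of consumers.

```python
import queue
import threading
import time


class FakeDatabase:
    """Hypothetical shared downstream resource that records peak concurrent calls."""

    def __init__(self):
        self._lock = threading.Lock()
        self._active = 0
        self.peak = 0

    def save(self, message):
        with self._lock:
            self._active += 1
            self.peak = max(self.peak, self._active)
        time.sleep(0.01)  # simulate query latency
        with self._lock:
            self._active -= 1


def peak_db_concurrency(num_consumers, num_messages=50):
    q = queue.Queue()
    db = FakeDatabase()
    for m in range(num_messages):
        q.put(m)

    def consumer():
        while True:
            try:
                message = q.get_nowait()
            except queue.Empty:
                return  # queue drained
            db.save(message)  # every consumer hits the same database

    threads = [threading.Thread(target=consumer) for _ in range(num_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return db.peak


for n in (1, 4, 8):
    print(n, "consumers -> peak concurrent DB calls:", peak_db_concurrency(n))
```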

Message Ordering

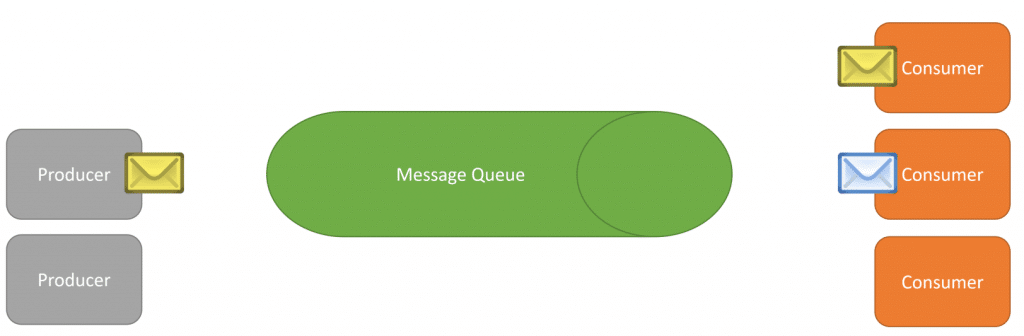

The second common issue with the Competing Consumers Pattern is the expectation of processing messages in a particular order. For example, if the Producer is creating messages based on a workflow the client is performing, the messages are created, and added to the queue, in a particular order.

Most message brokers support FIFO (First In First Out), which means that consumers will take the oldest message available to be processed.

However, this does not mean that correlated messages won't be processed at the same time.

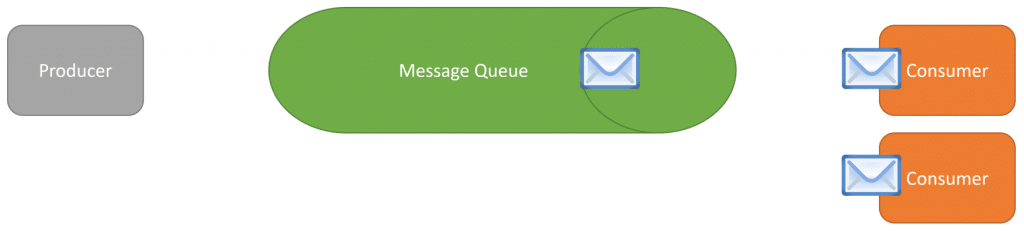

Here’s an example of a yellow message being processed by a consumer.

If the producer then produces another message related to the first one, and a consumer is available, that consumer will start processing it while the first message is still in progress.

This can have negative consequences for your system if you expect messages to be processed sequentially in a particular order. Although messages are received in order, they won't necessarily be processed in that order, because they're being processed concurrently.
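
The following sketch reproduces this with two consumers and two related messages. The message names `OrderPlaced` and `OrderShipped` and their processing times are hypothetical; the first message is assumed to be slower to handle. The queue hands the messages out in FIFO order, yet the second one finishes first.

```python
import queue
import threading
import time

# Hypothetical related messages: "OrderShipped" only makes sense after
# "OrderPlaced" has been handled.
q = queue.Queue()
q.put({"name": "OrderPlaced", "work_seconds": 0.5})   # slow to handle
q.put({"name": "OrderShipped", "work_seconds": 0.0})  # fast to handle

received, completed = [], []
lock = threading.Lock()


def consumer():
    while True:
        with lock:  # dequeue and record atomically so `received` reflects queue order
            try:
                message = q.get_nowait()
            except queue.Empty:
                return
            received.append(message["name"])
        time.sleep(message["work_seconds"])  # simulate processing time
        with lock:
            completed.append(message["name"])


threads = [threading.Thread(target=consumer) for _ in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print("received: ", received)   # ['OrderPlaced', 'OrderShipped']
print("completed:", completed)  # with these delays, ['OrderShipped', 'OrderPlaced']
```

Receiving in order is guaranteed by the FIFO queue; completing in order is not, because the two messages are handled concurrently.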

Source Code

Developer-level members of my CodeOpinion YouTube channel get access to the full source for any working demo application that I post on my blog or YouTube. Check out the membership for more info.